Snapshot Ensembles

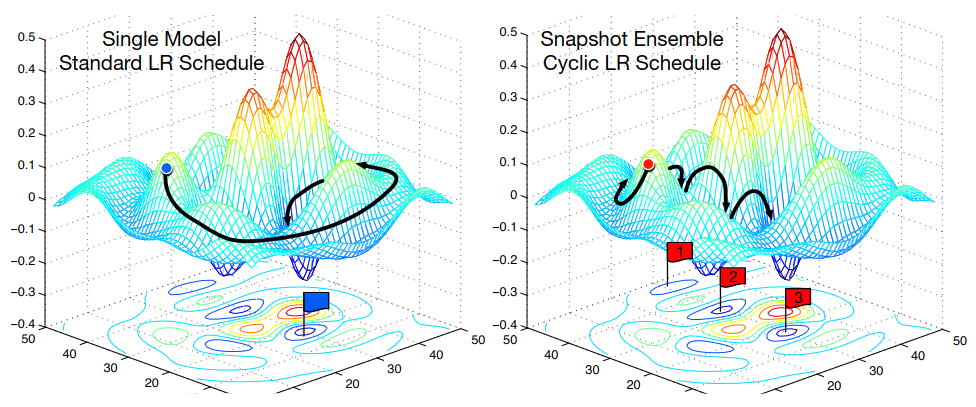

Training multiple deep neural networks from scratch is computationally expensive, because the training cost grows with every ensemble member. Snapshot Ensembling is an efficient temporal alternative: the ensemble is built from a single training run by saving checkpoints (snapshots) of one model's weights at several points along its training trajectory.

Snapshot Ensembles rely on a cyclic learning rate schedule that repeatedly alternates between large and small values. The large learning rates at the start of each cycle push the model out of its current minimum and into a different region of weight space, while the small learning rates at the end of each cycle let it converge to an accurate local minimum. The weights reached at the end of each cycle are saved, and each saved snapshot is treated as an independent ensemble member.
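The procedure described above can be sketched on a toy problem. This is a minimal sketch, assuming a single scalar weight and plain gradient descent; the helper names `collect_snapshots` and `ensemble_predict` are hypothetical and not part of any library. Each cycle restarts the learning rate at `lr_max` and anneals it with a cosine, the weight at the end of every cycle is stored as a snapshot, and the ensemble prediction averages the members' predictions. Averaging is an assumed combination rule here (the original Snapshot Ensembles paper averages the members' softmax outputs).

```python
import math
from typing import Callable, List


def collect_snapshots(
    w0: float,
    grad_fn: Callable[[float], float],
    n_cycles: int,
    iters_per_cycle: int,
    lr_max: float,
) -> List[float]:
    """Gradient descent with a cyclic cosine schedule; one snapshot per cycle."""
    w = w0
    snapshots: List[float] = []
    for _ in range(n_cycles):
        for i in range(iters_per_cycle):
            # Large step at the start of the cycle, annealed towards zero at its end.
            lr = lr_max / 2 * (math.cos(math.pi * i / iters_per_cycle) + 1)
            w -= lr * grad_fn(w)
        # The last steps used the smallest learning rates, so w has settled
        # near a local minimum: save it as one ensemble member.
        snapshots.append(w)
    return snapshots


def ensemble_predict(
    snapshots: List[float], predict_fn: Callable[[float, float], float], x: float
) -> float:
    """Average the predictions of all snapshots (hypothetical combination rule)."""
    return sum(predict_fn(w, x) for w in snapshots) / len(snapshots)


if __name__ == "__main__":
    # Toy convex loss (w - 3)^2 with gradient 2 * (w - 3). Every snapshot lands on
    # the same minimum here, so this only illustrates the control flow.
    snaps = collect_snapshots(0.0, lambda w: 2 * (w - 3), n_cycles=4,
                              iters_per_cycle=50, lr_max=0.4)
    print(snaps)
    print(ensemble_predict(snaps, lambda w, x: w * x, x=2.0))
```

On a convex toy loss every snapshot ends up at the same minimum; with a real network, the large-learning-rate phase at the start of each cycle is what lets consecutive snapshots settle in different minima and so gives the ensemble its diversity.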

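The learning rate schedule is a shifted, annealed cosine of the form:

$$
\alpha(t) = \frac{\alpha_0}{2}\left(\cos\left(\frac{\pi \operatorname{mod}\left(t-1, \lceil T/M \rceil\right)}{\lceil T/M \rceil}\right) + 1\right)
$$

where $\alpha_0$ is the initial learning rate, $t$ is the iteration number, $T$ is the total number of iterations, and $M$ is the number of cycles. Each cycle therefore lasts $\lceil T/M \rceil$ iterations, and the learning rate restarts at $\alpha_0$ at the beginning of every cycle.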
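The schedule can be computed per iteration with a few lines of Python. This is a minimal sketch, assuming 1-indexed iterations; `snapshot_lr` is a hypothetical helper name, and its parameters `alpha0`, `t`, `total_iters` and `n_cycles` stand for the initial learning rate, the iteration number, the total number of iterations and the number of cycles.

```python
import math


def snapshot_lr(t: int, alpha0: float, total_iters: int, n_cycles: int) -> float:
    """Shifted cosine annealing learning rate for 1-indexed iteration t."""
    cycle_len = math.ceil(total_iters / n_cycles)  # ceil(T / M) iterations per cycle
    pos = (t - 1) % cycle_len  # 0 at the first iteration of every cycle
    return alpha0 / 2 * (math.cos(math.pi * pos / cycle_len) + 1)


# T = 100 iterations split into M = 4 cycles of 25 iterations each.
for t in (1, 13, 25, 26):
    print(t, snapshot_lr(t, alpha0=0.1, total_iters=100, n_cycles=4))
```

With these settings the rate equals $\alpha_0 = 0.1$ at $t = 1$ and again at $t = 26$ (the start of the second cycle), and drops close to zero at $t = 25$, the last iteration of the first cycle, which is where a snapshot would be saved.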