Stochastic Weight Averaging

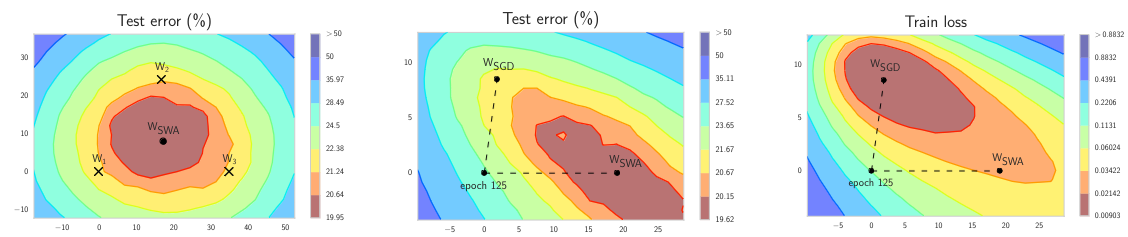

Stochastic Weight Averaging (SWA) is a training procedure that averages the weights of several networks visited by SGD during the final phase of training. It uses a learning rate schedule that keeps SGD exploring the same basin of attraction, so every network that enters the average lies in the same low-loss region.

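SGD tends to converge to the edges of wide, flat regions of the training loss landscape. The test loss landscape is usually shifted relative to the training loss landscape, so a point near the edge of a flat training region can have noticeably higher test loss. Averaging the weights that SGD visits moves the solution toward the center of the region, where training and test loss are more likely to align.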
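
A toy 1-D illustration of why averaging helps, under loudly simplified assumptions (a quadratic loss `0.5 * (w - center)**2`, Gaussian gradient noise, constant learning rate; none of this is the setting of the original SWA experiments). With a constant learning rate, SGD keeps bouncing around the minimum instead of settling at its center, and the average of its late iterates lands closer to the center than a typical individual iterate. `noisy_sgd_iterates` is a hypothetical helper written for this sketch.

```python
import random

def noisy_sgd_iterates(center, lr=0.1, noise=1.0, steps=200, seed=0):
    """Run SGD on the toy loss 0.5 * (w - center)**2 with Gaussian gradient noise."""
    rng = random.Random(seed)
    w = center + 5.0  # start away from the minimum
    iterates = []
    for _ in range(steps):
        grad = (w - center) + rng.gauss(0.0, noise)  # exact gradient plus noise
        w -= lr * grad
        iterates.append(w)
    return iterates

center = 2.0
# Keep only the second half, once SGD is just bouncing around the minimum.
tail = noisy_sgd_iterates(center)[100:]
avg = sum(tail) / len(tail)

mean_iterate_dist = sum(abs(w - center) for w in tail) / len(tail)
avg_dist = abs(avg - center)
print(f"mean distance of individual iterates: {mean_iterate_dist:.3f}")
print(f"distance of the averaged weights:     {avg_dist:.3f}")
```

By the triangle inequality the averaged point can never be farther from the center than the iterates are on average, so this shows the mechanism rather than measuring a real generalization gain.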

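The learning rate schedule is a cyclical linear decay from $\alpha_1$ to $\alpha_2$ with cycle length $c$, where the value at iteration $i$ is:

$$
\alpha(i) = \big(1 - t(i)\big)\,\alpha_1 + t(i)\,\alpha_2, \qquad t(i) = \frac{1}{c}\Big(\operatorname{mod}(i - 1,\, c) + 1\Big)
$$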
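
A direct transcription of the cyclical schedule, as a minimal sketch assuming iterations are counted from 1; the function name `swa_learning_rate` and its parameters `alpha1`, `alpha2`, `c` are hypothetical names for the symbols in the formula.

```python
def swa_learning_rate(i: int, alpha1: float, alpha2: float, c: int) -> float:
    """Cyclical linear decay from alpha1 to alpha2 with cycle length c (i starts at 1)."""
    t = ((i - 1) % c + 1) / c  # t(i) runs 1/c, 2/c, ..., 1 within each cycle
    return (1 - t) * alpha1 + t * alpha2

# Two cycles of length 4, decaying from 0.05 to 0.01:
print([round(swa_learning_rate(i, 0.05, 0.01, 4), 4) for i in range(1, 9)])
# prints [0.04, 0.03, 0.02, 0.01, 0.04, 0.03, 0.02, 0.01]
```

Because $t(i)$ starts at $1/c$ rather than 0, the rate never quite reaches $\alpha_1$ but hits $\alpha_2$ exactly on the last iteration of each cycle (whenever $i$ is a multiple of $c$).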

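The weight average is updated once per cycle, at the iteration where the learning rate reaches its minimum, as a running mean of the networks collected so far:

$$
w_{\text{SWA}} \leftarrow \frac{w_{\text{SWA}} \cdot n_{\text{models}} + w}{n_{\text{models}} + 1}
$$

where $w$ are the current SGD weights and $n_{\text{models}}$ is the number of networks already in the average. SGD itself keeps training $w$; the average is stored separately.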
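
A minimal sketch of the running-average update, assuming the weights are flat Python lists and using made-up snapshot values in place of a real network; `update_swa`, `c`, and `snapshots` are hypothetical names for this illustration, not a library API. (PyTorch provides a real implementation in `torch.optim.swa_utils.AveragedModel`.)

```python
def update_swa(w_swa: list[float], w: list[float], n_models: int) -> list[float]:
    """Running mean: (w_swa * n_models + w) / (n_models + 1), elementwise."""
    return [(a * n_models + b) / (n_models + 1) for a, b in zip(w_swa, w)]

c = 3  # hypothetical cycle length
# Hypothetical SGD weights observed at the last iteration of each cycle.
snapshots = {3: [1.0, 4.0], 6: [2.0, 5.0], 9: [3.0, 9.0]}

w_swa, n_models = [0.0, 0.0], 0  # with n_models == 0 the first update just copies w
for i in range(1, 10):
    if i % c == 0:  # end of a cycle: the learning rate is at its minimum
        w_swa = update_swa(w_swa, snapshots[i], n_models)
        n_models += 1

print(w_swa, n_models)  # prints [2.0, 6.0] 3
```

Since the update is an exact running mean, the result equals the plain average of the three snapshots, yet only one extra copy of the weights has to be kept in memory.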